Research

Here you can find information on my past and present research projects. The different categories are massively overlapping; I find that preferable to trying to figure out whether (e.g.) a paper on robustness in climate modeling belongs under “robustness” or “philosophy of climate science.” If you're just looking for links or a list of publications, I'd suggest heading over to the downloads tab.

Data Visualization

Graphs are ubiquitous in the sciences: they're used in data analysis, in justifying conclusions, and (perhaps especially) in communicating results. Despite this, there's almost no discussion of graphs (qua graphs) in philosophy of science. I'm developing a book project on the subject, with the aim of answering four questions: (1) How should we understand graphs as vehicles of communication? (2) Why are graphs so useful in data analysis and communication? (3) What are the norms of graphical communication? (4) What can graphs tell us about (the philosophy of) statistics?

Abstracts:

- On the semantics of maps [in preparation]

- How do you Assert a Graph?

Casati and Varzi (1999) provide an influential account of “formal maps” on which regions represent objects and markers properties. In this paper, I invert their approach by treating markers as representing locatable objects and regions as representing locational properties of those objects. The syntax and semantics lends itself to a natural consequence relation patterned on that of model theory, and the resulting “map theory” is logically equivalent to a first-order language with a restricted range of constants and predicates. I then show how the account yields a neat explanation for the apparent differences between maps and sentences. The main difference between maps and sentences is the main difference between models and sentences: maps and models satisfy many propositions at once, while a sentence states a single one.

I extend the literature on norms of assertion to the ubiquitous use of graphs in scientific papers and presentations, which I term “graphical testimony.” On my account, the testimonial presentation of a graph involves commitment to both (a) the in-context reliability of the graph's framing devices and (b) the perspective-relative accuracy of the graph's content. My account resolves apparent tensions between the demands of honesty and the common scientific practice of presenting idealized or simplified graphs: these “distortions” can be honest so long as there's the right kind of alignment between the distortion and the background beliefs and values of the audience. I end by suggesting that we should expect a similar relationship between perspectives and the norms of testimony in other non-linguistic cases and indeed in many linguistic cases as well.

Philosophy of Climate Science

There are two main themes to my work on climate science. First, that the common practice of treating model ensembles like statistical samples is not just defensible, but probably better than any alternative. Second, that the process of estimating the human contribution to warming can productively be understood as a kind of measurement process, replete with its own forms of calibration and robustness. I've also recently begun branching into climate economics, where I argue that there are deep philosophical questions that go beyond familiar debates about the choice of the proper discount rate.

Abstracts:

- Methodologies of Uncertainty

- Stability in Climate Change Attribution

- Contrast Classes and Agreement in Climate Modeling

- Against “Possibilist” Interpretations of Climate Models

- Interpreting the Probabilistic Language in IPCC Reports

- Calibrating Statistical Tools

- When is an Ensemble like a Sample?

- Climate Models and the Irrelevance of Chaos

What probability distribution should we use when calculating the expected utility of different climate policies? There's a substantial literature on this question in economics, where it is largely treated as empirical or technical—i.e., the question is what distribution is justified by the empirical evidence and/or economic theory. The question has a largely overlooked methodological component, however—a component that concerns how (climate) economics should be carried out, rather than what the science tells us. Indeed, the major dispute in the literature is over precisely this aspect of the question: figures like William Nordhaus and Martin Weitzman disagree less about the evidence or the theory than they do about which possibilities we should consider when making political decisions—or offering economic advice—about climate change. There are two important implications. First, at least some of the economic literature misfires in attempting to treat the debate as open to empirical or technical resolution; a better path to progress on the question involves further investigating the policy recommendations that can be derived from the two positions. Second, the choice of discount rate is entangled with the choice of probability distribution: as both choices are responsive to the same normative reasons, we cannot evaluate the arguments in favour of a particular discount rate without considering the implications of those same arguments for the choice of distribution.

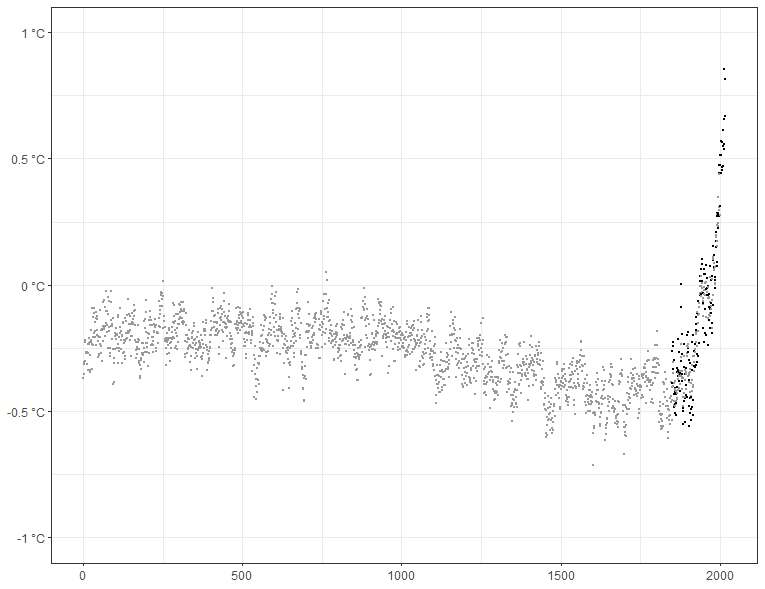

Climate change attribution involves measuring the human contribution to warming. In principle, inaccuracies in the characterization of the climate's internal variability could undermine these measurements. Equally in principle, the success of the measurement practice could provide evidence that our assumptions about internal variability are correct. I argue that neither condition obtains: current measurement practices do not provide evidence for the accuracy of our assumptions precisely because they are not as sensitive to inaccuracy in the characterization of internal variability as one might worry. I end by drawing some lessons about “robustness reasoning” more generally.

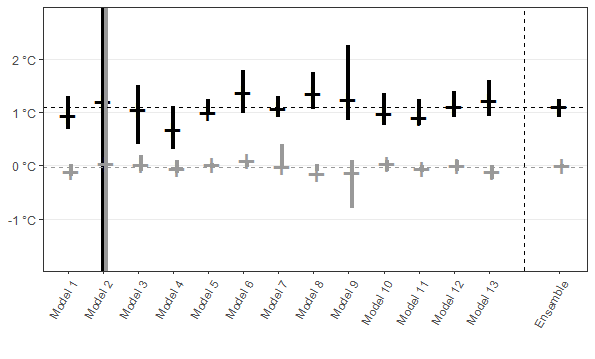

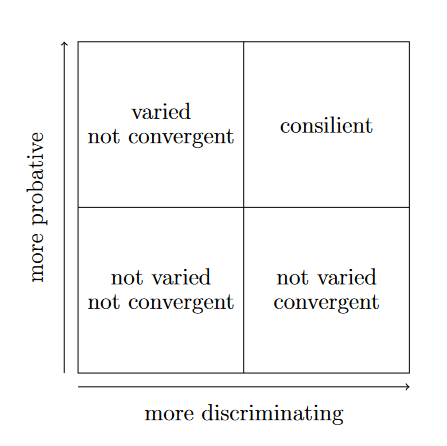

In an influential paper, Wendy Parker argues that agreement across climate models isn't a reliable marker of confirmation in the context of cutting-edge climate science. In this paper, I argue that while Parker's conclusion is generally correct, there is an important class of exceptions. Broadly speaking, agreement is not a reliable marker of confirmation when the hypotheses under consideration are mutually consistent—when, e.g., we're concerned with overlapping ranges. Since many cutting-edge questions in climate modeling require making distinctions between mutually consistent hypotheses, agreement across models will be generally unreliable in this domain. In cases where we are only concerned with mutually exclusive hypotheses, by contrast, agreement across climate models is plausibly a reliable marker of confirmation.

Climate scientists frequently employ (groups of) heavily idealized models. How should these models be interpreted? A number of philosophers have suggested that a possibilist interpretation might be preferable, where this entails interpreting climate models as standing in for possible scenarios that could occur, but not as providing any sort of information about how probable those scenarios are. The present paper argues that possibilism is (a) undermotivated by the philosophical and empirical arguments that have been advanced in the literature, (b) incompatible with successful practices in the science, and (c) liable to present a less accurate picture of the current state of research and/or uncertainty than probabilistic alternatives. There are good arguments to be had about how precisely to interpret climate models but our starting point should be that the models provide evidence relevant to the evaluation of hypotheses concerning the actual world in at least some cases.

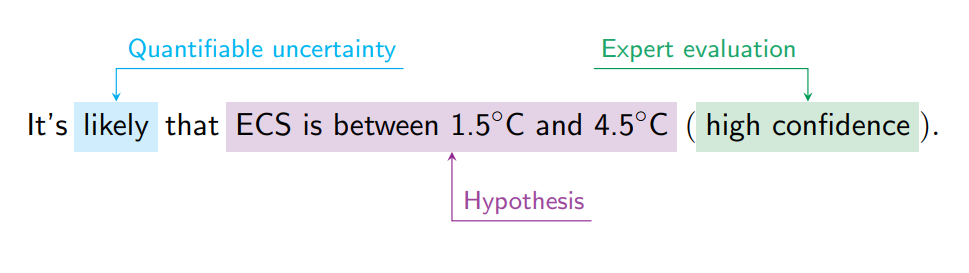

The Intergovernmental Panel on Climate Change (IPCC) often qualifies its statements by use of probabilistic “likelihood” language. In this paper, I show that this language is not properly interpreted in either frequentist or Bayesian terms—simply put, the IPCC uses both kinds of statistics to calculate these likelihoods. I then offer a deflationist interpretation: the probabilistic language expresses nothing more than how compatible the evidence is with the given hypothesis according to some method that generates normalized scores. I end by drawing some tentative normative conclusions.

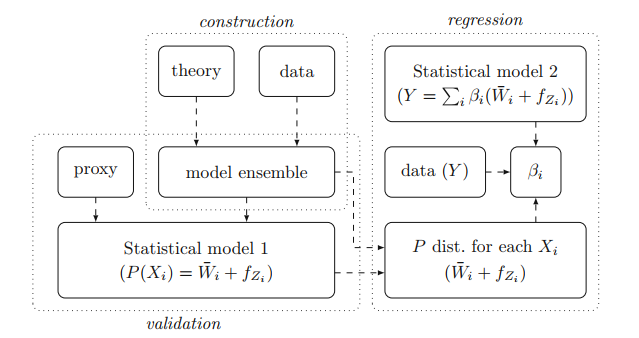

Over the last twenty-five years, climate scientists working on the attribution of climate change to humans have developed increasingly sophisticated statistical models in a process that can be understood as a kind of calibration: the gradual changes to the statistical models employed in attribution studies served as iterative revisions to a measurement(-like) procedure motivated primarily by the aim of neutralizing particularly troublesome sources of error or uncertainty. This practice is in keeping with recent work on the evaluation of models more generally that views models as tools for particular tasks: what drives the process is the desire for models that provide more reliable grounds for inference rather than accuracy to the underlying mechanisms of data-generation.

Climate scientists often apply statistical tools to a set of different estimates generated by an “ensemble” of models. In this paper, I argue that the resulting inferences are justified in the same way as any other statistical inference: what must be demonstrated is that the statistical model that licenses the inferences accurately represents the probabilistic relationship between data and target. This view of statistical practice is appropriately termed “model-based,” and I examine the use of statistics in climate fingerprinting to show how the difficulties that climate scientists encounter in applying statistics to ensemble-generated data are the practical difficulties of normal statistical practice. The upshot is that whether the application of statistics to ensemble-generated data yields trustworthy results should be expected to vary from case to case.

Philosophy of climate science has witnessed substantial recent debate over the existence of a dynamical or “structural” analogue of chaos, which is alleged to spell trouble for certain uses of climate models. In this paper, I argue that the debate over the analogy can and should be separated from its alleged epistemic implications: chaos-like behavior is neither necessary nor sufficient for small dynamical misrepresentations to generate erroneous results. I identify the relevant kind of sensitivity with a kind of safety failure and argue that the resulting set of issues has different stakes than the extant debate would indicate.

Epistemology

Most of my work in epistemology concerns social epistemology, particularly (scientific) testimony. A recurring problem is how to square the idealizations, abstractions, and “expressive mistakes” common to testimony with the stringent norms of testimony found in the epistemology literature. I also have a side interest in formal epistemology. I'm convinced that there are very important lessons to be learned by examining the differences between the behavior of idealized epistemic agents and our actual epistemic practices (as exemplified by science)—that is, we can learn a lot about what makes various scientific practices valuable by looking at the gaps that they (attempt to) bridge between ourselves and agents found in formal models.

Abstracts:

- On the proof paradox [with Samuel C. Fletcher; in preparation]

- How do you Assert a Graph?

- The Cooperative Origins of Epistemic Rationality?

- Accuracy, Probabilism, and the Insufficiency of the Alethic

- Science, Assertion, and the Common Ground

A major problem in legal epistemology is the “proof paradox”: laypeople and professionals view judgments of culpability that rest on “bare statistical evidence” as unwarranted even when this evidence seems to provide the same or more support for the culpability of the defendant compared with what is required in other cases. We bring the perspective of philosophers of science to bear on this problem, arguing for two main conclusions. First, the concept of “bare statistical evidence” is crucially ambiguous between “statistical” in the sense of descriptive population statistics and “statistical” in the sense of inferential statistics. Descriptive population statistics cannot provide a reliable universal foundation for (legal) decision-making, but the same is not true of inferential statistics. Second, considerations of values analogous to those in science can, do, and should play a crucial role in the courts’ response to “bare statistical evidence.” Like (classical) statisticians, courts must balance the risks of different types of error, and we suggest that both the general practice and apparent exceptions can be explained by value judgments about the costs of different errors.

I extend the literature on norms of assertion to the ubiquitous use of graphs in scientific papers and presentations, which I term “graphical testimony.” On my account, the testimonial presentation of a graph involves commitment to both (a) the in-context reliability of the graph's framing devices and (b) the perspective-relative accuracy of the graph's content. My account resolves apparent tensions between the demands of honesty and the common scientific practice of presenting idealized or simplified graphs: these “distortions” can be honest so long as there's the right kind of alignment between the distortion and the background beliefs and values of the audience. I end by suggesting that we should expect a similar relationship between perspectives and the norms of testimony in other non-linguistic cases and indeed in many linguistic cases as well.

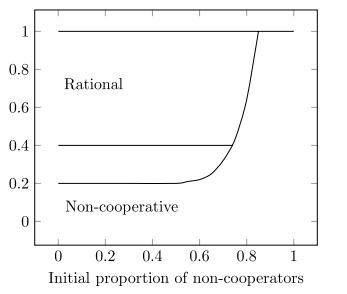

Recently, both evolutionary anthropologists and some philosophers have argued that cooperative social settings unique to humans play an important role in the development of both our cognitive capacities and the “construction” of “normative rationality” or “a normative point of view as a self-regulating mechanism” (Tomasello 2017, 38). In this article, I use evolutionary game theory to evaluate the plausibility of the claim that cooperation fosters epistemic rationality. Employing an extension of signal-receiver games that I term “telephone games,” I show that cooperative contexts work as advertised: under plausible conditions, these scenarios favor epistemically rational agents over irrational ones designed to do just as well as them in non-cooperative contexts. I then show that the basic results are strengthened by introducing complications that make the game more realistic.

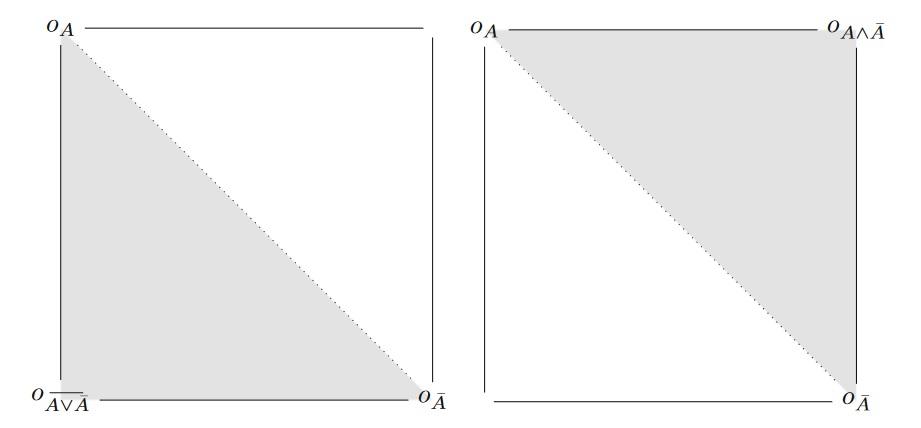

The best and most popular argument for probabilism is the accuracy-dominance argument, which purports to show that alethic considerations alone support the view that an agent's degrees of belief should always obey the axioms of probability. I argue that extant versions of the accuracy-dominance argument face a problem. In order for the mathematics of the argument to function as advertised, we must assume that every omniscient credence function is classically consistent; there can be no worlds in the set of dominance-relevant worlds that obey some other logic. This restriction cannot be motivated on alethic grounds unless we're also willing to accept that rationality requires belief in every metaphysical necessity, as the distinction between a priori logical necessities and a posteriori metaphysical ones is not an alethic one. To motivate the restriction to classically consistent worlds, non-alethic motivation is required. And thus, if there is a version of the accuracy-dominance argument in support of probabilism, it isn't one that is grounded in alethic considerations alone.
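As a rough illustration of the mathematics at issue (a toy sketch, not drawn from the paper), the snippet below checks Brier-score dominance for a single proposition A and its negation. The credences, the names `brier` and `dominates`, and the hypothetical non-classical world at which A and not-A are both true are all assumptions made for the example.

```python
# Toy illustration only: the credences, worlds, and function names are assumptions.

def brier(credences, world):
    """Inaccuracy as summed squared distance from the truth values (lower is better)."""
    return sum((c - t) ** 2 for c, t in zip(credences, world))

def dominates(better, worse, worlds):
    """True if `better` is strictly less inaccurate than `worse` at every world."""
    return all(brier(better, w) < brier(worse, w) for w in worlds)

# Truth values for the pair (A, not-A) at the classically consistent worlds.
CLASSICAL_WORLDS = [(1, 0), (0, 1)]
# A hypothetical non-classical world at which A and not-A are both true.
GLUT_WORLD = (1, 1)

incoherent = (0.6, 0.6)  # credences in A and not-A sum to more than 1
coherent = (0.5, 0.5)    # a probabilistically coherent alternative

print(dominates(coherent, incoherent, CLASSICAL_WORLDS))
print(dominates(coherent, incoherent, CLASSICAL_WORLDS + [GLUT_WORLD]))
```

With only the classical worlds in play, the coherent credences dominate the incoherent ones; once the non-classical world is admitted, the dominance fails, which is why the restriction to classically consistent worlds carries so much weight in the argument.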

I argue that the appropriateness of an assertion is sensitive to context—or, really, the “common ground”—in a way that hasn't previously been emphasized by philosophers. This kind of context-sensitivity explains why some scientific (and philosophical) conclusions seem to be appropriately asserted even though they are not known, believed, or justified on the available evidence. I then consider other recent attempts to account for this phenomenon and argue that if they are to be successful, they need to recognize the kind of context-sensitivity that I argue for.

Philosophy of Statistics

My current postdoctoral project is on the epistemic foundations of classical statistics; Samuel C. Fletcher and I are currently developing multiple papers that connect statistics to familiar problems in epistemology and general philosophy of science. Outside of that project, my work on the subject primarily concerns the idealizations found in climate statistics. I have plans to extend my analysis to idealization in statistics more generally. Realistically, this is probably another book project that hooks up with my work on epistemology to ask what we can learn about statistics by comparing the idealized theory with the reality.

Abstracts:

- On inductive risk [with Samuel C. Fletcher; in preparation]

- On the proof paradox [with Samuel C. Fletcher; in preparation]

- Who's Afraid of the Base-Rate Fallacy

- Supposition and (Statistical) Models

- Calibrating Statistical Tools

- When is an Ensemble like a Sample?

We argue that classical statistical inference relies on risk evaluations that are precisely analogous to the tradeoff between false positives and negatives even in cases where the latter tradeoff is irrelevant. Crucially, however, these value-laden choices are subject to a “consistency” constraint that ensures that the influence of values will wash out in the long run. We show that this formal result generalizes to qualitative cases and argue that consistency should be treated as a constraint on value judgments in science more broadly.

A major problem in legal epistemology is the “proof paradox”: laypeople and professionals view judgments of culpability that rest on “bare statistical evidence” as unwarranted even when this evidence seems to provide the same or more support for the culpability of the defendant compared with what is required in other cases. We bring the perspective of philosophers of science to bear on this problem, arguing for two main conclusions. First, the concept of “bare statistical evidence” is crucially ambiguous between “statistical” in the sense of descriptive population statistics and “statistical” in the sense of inferential statistics. Descriptive population statistics cannot provide a reliable universal foundation for (legal) decision-making, but the same is not true of inferential statistics. Second, considerations of values analogous to those in science can, do, and should play a crucial role in the courts’ response to “bare statistical evidence.” Like (classical) statisticians, courts must balance the risks of different types of error, and we suggest that both the general practice and apparent exceptions can be explained by value judgments about the costs of different errors.

This paper evaluates the back-and-forth between Mayo, Howson, and Achinstein over whether classical statistics commits the base-rate fallacy. I show that Mayo is correct to claim that Howson's arguments rely on a misunderstanding of classical theory. I then argue that Achinstein's refined version of the argument turns on largely undefended epistemic assumptions about “what we care about” when evaluating hypotheses. I end by suggesting that Mayo's positive arguments are no more decisive than her opponents': even if correct, they are unlikely to compel anyone not already sympathetic to the classical picture.

In a recent paper, Sprenger advances what he calls a “suppositional” answer to the question of why a Bayesian agent's credences should align with the probabilities found in statistical models. We show that Sprenger's account trades on an ambiguity between hypothetical and subjunctive suppositions and cannot succeed once we distinguish between the two.

Over the last twenty-five years, climate scientists working on the attribution of climate change to humans have developed increasingly sophisticated statistical models in a process that can be understood as a kind of calibration: the gradual changes to the statistical models employed in attribution studies served as iterative revisions to a measurement(-like) procedure motivated primarily by the aim of neutralizing particularly troublesome sources of error or uncertainty. This practice is in keeping with recent work on the evaluation of models more generally that views models as tools for particular tasks: what drives the process is the desire for models that provide more reliable grounds for inference rather than accuracy to the underlying mechanisms of data-generation.

Climate scientists often apply statistical tools to a set of different estimates generated by an “ensemble” of models. In this paper, I argue that the resulting inferences are justified in the same way as any other statistical inference: what must be demonstrated is that the statistical model that licenses the inferences accurately represents the probabilistic relationship between data and target. This view of statistical practice is appropriately termed “model-based,” and I examine the use of statistics in climate fingerprinting to show how the difficulties that climate scientists encounter in applying statistics to ensemble-generated data are the practical difficulties of normal statistical practice. The upshot is that whether the application of statistics to ensemble-generated data yields trustworthy results should be expected to vary from case to case.

Science Communication

Our communicative practices are by-and-large continuous: everyday talk shades into scientific communication properly-so-called, and scientific communication by way of spoken and written words shades into (and is often combined with) scientific communication by way of depictions like graphs and maps. These basic continuities constrain the norms that can be operative in the scientific and depictive context. My work on the subject explores these constraints and considers how these norms can be better realized in the practice of climate science.

Abstracts:

- How do you Assert a Graph?

- Interpreting the Probabilistic Language in IPCC Reports

- Science, Assertion, and the Common Ground

I extend the literature on norms of assertion to the ubiquitous use of graphs in scientific papers and presentations, which I term “graphical testimony.” On my account, the testimonial presentation of a graph involves commitment to both (a) the in-context reliability of the graph's framing devices and (b) the perspective-relative accuracy of the graph's content. My account resolves apparent tensions between the demands of honesty and the common scientific practice of presenting idealized or simplified graphs: these “distortions” can be honest so long as there's the right kind of alignment between the distortion and the background beliefs and values of the audience. I end by suggesting that we should expect a similar relationship between perspectives and the norms of testimony in other non-linguistic cases and indeed in many linguistic cases as well.

The Intergovernmental Panel on Climate Change (IPCC) often qualifies its statements by use of probabilistic “likelihood” language. In this paper, I show that this language is not properly interpreted in either frequentist or Bayesian terms—simply put, the IPCC uses both kinds of statistics to calculate these likelihoods. I then offer a deflationist interpretation: the probabilistic language expresses nothing more than how compatible the evidence is with the given hypothesis according to some method that generates normalized scores. I end by drawing some tentative normative conclusions.

I argue that the appropriateness of an assertion is sensitive to context—or, really, the “common ground”—in a way that hasn't previously been emphasized by philosophers. This kind of context-sensitivity explains why some scientific (and philosophical) conclusions seem to be appropriately asserted even though they are not known, believed, or justified on the available evidence. I then consider other recent attempts to account for this phenomenon and argue that if they are to be successful, they need to recognize the kind of context-sensitivity that I argue for.

Robustness

I think that “robustness” is an incredibly simple phenomenon, at least from the perspective of confirmation theory: sometimes one hypothesis predicts that two or more data points will be observed together when all or most of its competitors would make that unlikely. Contra most of the rest of the literature on the subject, that's all there is to it. I've also been writing, on and off for the last few years, a paper that compares the different formal models of robustness; someday I will catch up with the literature's creation of new ones.

Abstracts:

- Stability in Climate Change Attribution

- The Unity of Robustness

- Contrast Classes and Agreement in Climate Modeling

Climate change attribution involves measuring the human contribution to warming. In principle, inaccuracies in the characterization of the climate's internal variability could undermine these measurements. Equally in principle, the success of the measurement practice could provide evidence that our assumptions about internal variability are correct. I argue that neither condition obtains: current measurement practices do not provide evidence for the accuracy of our assumptions precisely because they are not as sensitive to inaccuracy in the characterization of internal variability as one might worry. I end by drawing some lessons about “robustness reasoning” more generally.

A number of philosophers of science have argued that there are important differences between robustness in modeling and experimental contexts, and—in particular—many of them have claimed that the former is non-confirmatory. In this paper, I argue for the opposite conclusion: robust hypotheses are confirmed under conditions that do not depend on the differences between models and experiments—that is, the degree to which the robust hypothesis is confirmed depends on precisely the same factors in both situations. The positive argument turns on the fact that confirmation theory doesn't recognize a difference between different sources of evidence. Most of the paper is devoted to rebutting various objections designed to show that it should. I end by explaining why philosophers of science have (often) gone wrong on this point.
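The confirmation-theoretic point can be made concrete with a toy Bayesian calculation. This is only a sketch under stated assumptions: the prior, the two likelihoods, and the function name `posterior` are invented for illustration and do not come from the paper.

```python
# Toy numbers only: the prior and likelihoods below are assumptions for illustration.

def posterior(prior, lik_h, lik_rival):
    """Posterior probability of H against a single rival, by Bayes' theorem."""
    joint_h = prior * lik_h
    joint_rival = (1 - prior) * lik_rival
    return joint_h / (joint_h + joint_rival)

# Probability that two results agree, given H and given its rival.
p_agree_given_h = 0.8
p_agree_given_rival = 0.1

# Nothing in the calculation records whether the two results came from
# models or from experiments; only the likelihoods matter.
print(round(posterior(0.5, p_agree_given_h, p_agree_given_rival), 3))
```

Because the source of the agreeing results never enters the computation, any difference between modeling and experimental contexts would have to show up as a difference in the likelihoods themselves.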

In an influential paper, Wendy Parker argues that agreement across climate models isn't a reliable marker of confirmation in the context of cutting-edge climate science. In this paper, I argue that while Parker's conclusion is generally correct, there is an important class of exceptions. Broadly speaking, agreement is not a reliable marker of confirmation when the hypotheses under consideration are mutually consistent—when, e.g., we're concerned with overlapping ranges. Since many cutting-edge questions in climate modeling require making distinctions between mutually consistent hypotheses, agreement across models will be generally unreliable in this domain. In cases where we are only concerned with mutually exclusive hypotheses, by contrast, agreement across climate models is plausibly a reliable marker of confirmation.

History of (philosophy of) Science

Finally, I've worked on a few projects in the history of (philosophy of) science broadly construed. There's little that unifies these papers other than a (semi-) historical question that I found perplexing and couldn't find any satisfying solutions to in the literature.

Abstracts:

- Forces in a True and Physical Sense

- How to Do Things with Theory

- William Whewell's Semantic Account of Induction

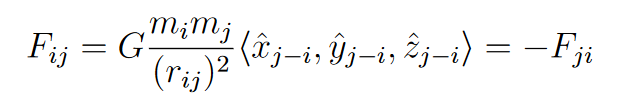

J. Wilson (2009), Moore (2012), and Massin (2017) identify an overdetermination problem arising from the principle of composition in Newtonian physics. I argue that the principle of composition is a red herring: what's really at issue are contrasting metaphysical views about how to interpret the science. One of these views—that real forces are to be tied to physical interactions like pushes and pulls—is a superior guide to real forces than the alternative, which demands that real forces are tied to “realized” accelerations. Not only is the former view employed in the actual construction of Newtonian models, the latter is both unmotivated and inconsistent with the foundations and testing of the science.

Pierre Duhem's influential argument for holism relies on a view of the role that background theory plays in testing: according to this still common account of “auxiliary hypotheses,” elements of background theory serve as truth-apt premises in arguments for or against a hypothesis. I argue that this view is mistaken. Rather than serving as truth-apt premises in arguments, auxiliary hypotheses are employed as (reliability-apt) “epistemic tools”: instruments that perform specific tasks in connecting our theoretical questions with the world but that are not (or not usually) premises in arguments. On the resulting picture, the acceptability of an auxiliary hypothesis depends not on its truth but on contextual factors such as the task or purpose it is put to and the other tools employed alongside it.

William Whewell's account of induction differs dramatically from the one familiar from 20th Century debates. I argue that Whewell's induction can be usefully understood by comparing the difference between his views and more standard accounts to contemporary debates between semantic and syntactic views of theories: rather than understanding inductive inference as capturing a relationship between sentences or propositions, Whewell understands it as a method for constructing a model of the world. The difference between this (“semantic”) view and the more familiar (“syntactic”) picture of induction is reflected in other aspects of Whewell's philosophy of science, particularly his treatment of consilience and the order of discovery.